A corollary discharge circuit in human speech

Amirhossein Kahlilian-Gourtani, Ran Wang, Xupeng Chen, Leyao Yu, Patricia Dugan, Daniel Friedman, Werner Doyle, Orrin Devinsky, Yao Wang and Adeen Flinker

When we vocalize, our brain distinguishes self-generated sounds from external ones. A corollary discharge signal supports this function in animals; however, in humans, its exact origin and temporal dynamics remain unknown. We report electrocorticographic recordings in neurosurgical patients and a connectivity analysis framework based on Granger causality that reveals major neural communications. We find a reproducible source for corollary discharge across multiple speech production paradigms localized to the ventral speech motor cortex before speech articulation. The uncovered discharge predicts the degree of auditory cortex suppression during speech, its well-documented consequence. These results reveal the human corollary discharge source and timing with far-reaching implication for speech motor-control as well as auditory hallucinations in human psychosis.

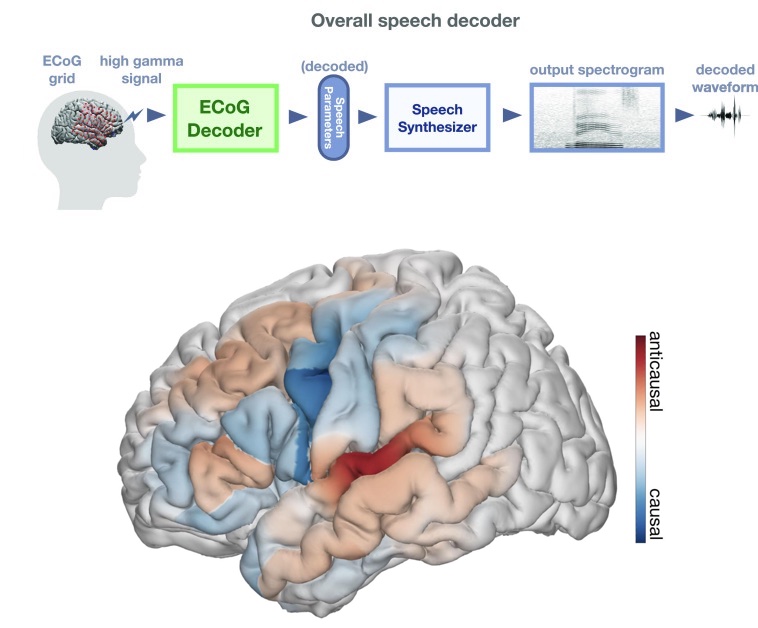

A neural speech decoding framework leveraging deep learning and speech synthesis

Xupeng Chen, Ran Wang, Amirhossein Kahlilian-Gourtani, Leyao Yu, Patricia Dugan, Daniel Friedman, Werner Doyle, Orrin Devinsky, Yao Wang and Adeen Flinker

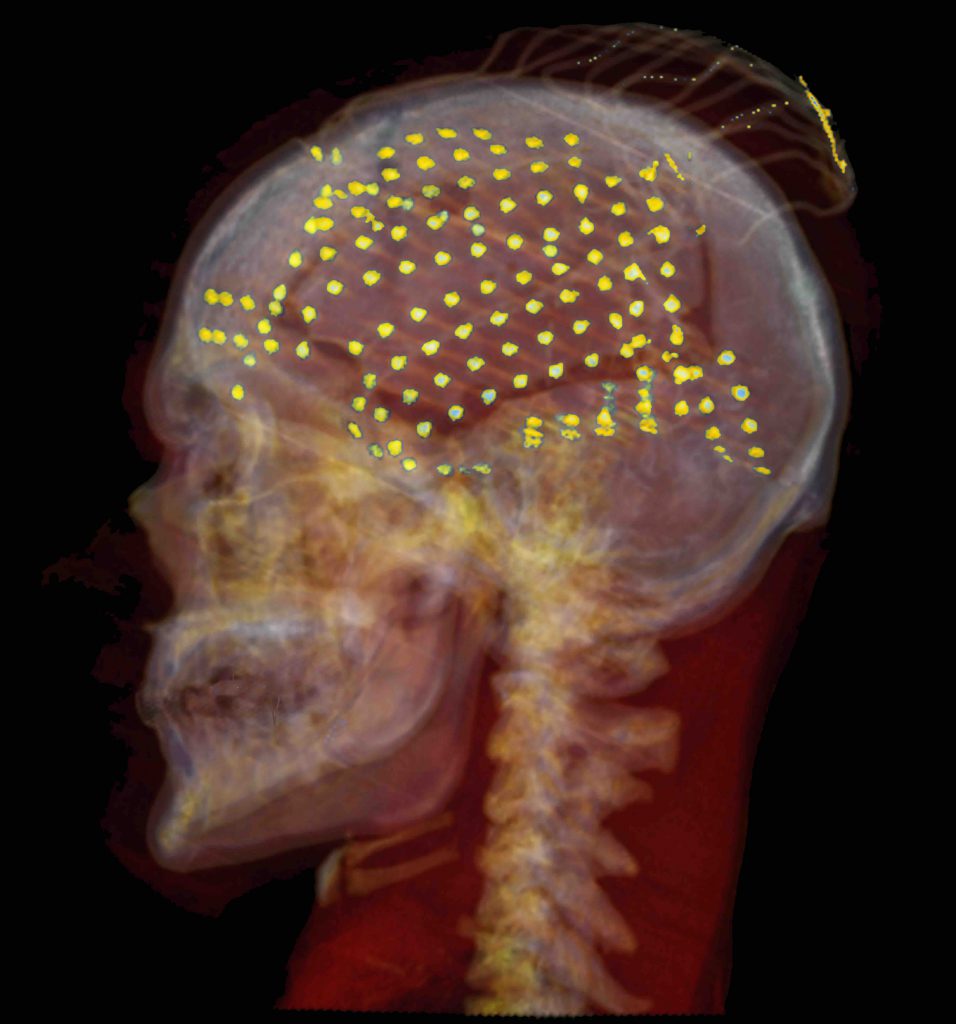

Decoding human speech from neural signals is essential for brain–computer interface (BCI) technologies that aim to restore speech in populations with neurological deficits. However, it remains a highly challenging task, compounded by the scarce availability of neural signals with corresponding speech, data complexity and high dimensionality. Here we present a novel deep learning-based neural speech decoding framework that includes an ECoG decoder that translates electrocorticographic (ECoG) signals from the cortex into interpretable speech parameters and a novel differentiable speech synthesizer that maps speech parameters to spectrograms. We have developed a companion speech-to-speech auto-encoder consisting of a speech encoder and the same speech synthesizer to generate reference speech parameters to facilitate the ECoG decoder training. This framework generates natural-sounding speech and is highly reproducible across a cohort of 48 participants. Our experimental results show that our models can decode speech with high correlation, even when limited to only causal operations, which is necessary for adoption by real-time neural prostheses. Finally, we successfully decode speech in participants with either left or right hemisphere coverage, which could lead to speech prostheses in patients with deficits resulting from left hemisphere damage.

Timing and location of speech errors induced by direct cortical stimulation

Heather Kabakoff, Leyao Yu, Patricia Dugan, Daniel Friedman, Werner Doyle, Orrin Devinsky, Adeen Flinker

Cortical regions supporting speech production are commonly established using neuroimaging techniques in both research and clinical settings. However, for neurosurgical purposes, structural function is routinely mapped peri-operatively using direct electrocortical stimulation. While this method is the gold standard for identification of eloquent cortical regions to preserve in neurosurgical patients, there is lack of specificity of the actual underlying cognitive processes being interrupted. To address this, we propose mapping the temporal dynamics of speech arrest across peri-sylvian cortices by quantifying the latency between stimulation and speech deficits. In doing so, we are able to substantiate hypotheses about distinct region-specific functional roles (e.g. planning versus motor execution). In this retrospective observational study, we analysed 20 patients (12 female; age range 14–43) with refractory epilepsy who underwent continuous extra-operative intracranial EEG monitoring of an automatic speech task during clinical bedside language mapping. Latency to speech arrest was calculated as time from stimulation onset to speech arrest onset, controlling for individual speech rate. Most instances of motor-based arrest (87.5% of 96 instances) were in sensorimotor cortex with mid-range latencies to speech arrest with a distributional peak at 0.47 s. Speech arrest occurred in numerous regions, with relatively short latencies in supramarginal gyrus (0.46 s), superior temporal gyrus (0.51 s) and middle temporal gyrus (0.54 s), followed by relatively long latencies in sensorimotor cortex (0.72 s) and especially long latencies in inferior frontal gyrus (0.95 s). Non-parametric testing for speech arrest revealed that region predicted latency; latencies in supramarginal gyrus and in superior temporal gyrus were shorter than in sensorimotor cortex and in inferior frontal gyrus. Sensorimotor cortex is primarily responsible for motor-based arrest. Latencies to speech arrest in supramarginal gyrus and superior temporal gyrus (and to a lesser extent middle temporal gyrus) align with latencies to motor-based arrest in sensorimotor cortex. This pattern of relatively quick cessation of speech suggests that stimulating these regions interferes with the outgoing motor execution. In contrast, the latencies to speech arrest in inferior frontal gyrus and in ventral regions of sensorimotor cortex were significantly longer than those in temporoparietal regions. Longer latencies in the more frontal areas (including inferior frontal gyrus and ventral areas of precentral gyrus and postcentral gyrus) suggest that stimulating these areas interrupts a higher-level speech production process involved in planning. These results implicate the ventral specialization of sensorimotor cortex (including both precentral and postcentral gyri) for speech planning above and beyond motor execution.

Speech-induced suppression and vocal feedback sensitivity in human cortex

Muge Ozker, Leyao Yu, Patricia Dugan, Daniel Friedman, Werner Doyle, Orrin Devinsky, Adeen Flinker

Across the animal kingdom, neural responses in the auditory cortex are suppressed during vocalization, and humans are no exception. A common hypothesis is that suppression increases sensitivity to auditory feedback, enabling the detection of vocalization errors. This hypothesis has been previously confirmed in non-human primates, however a direct link between auditory suppression and sensitivity in human speech monitoring remains elusive. To address this issue, we obtained intracranial electroencephalography (iEEG) recordings from 35 neurosurgical participants during speech production. We first characterized the detailed topography of auditory suppression, which varied across superior temporal gyrus (STG). Next, we performed a delayed auditory feedback (DAF) task to determine whether the suppressed sites were also sensitive to auditory feedback alterations. Indeed, overlapping sites showed enhanced responses to feedback, indicating sensitivity. Importantly, there was a strong correlation between the degree of auditory suppression and feedback sensitivity, suggesting suppression might be a key mechanism that underlies speech monitoring. Further, we found that when participants produced speech with simultaneous auditory feedback, posterior STG was selectively activated if participants were engaged in a DAF paradigm, suggesting that increased attentional load can modulate auditory feedback sensitivity.

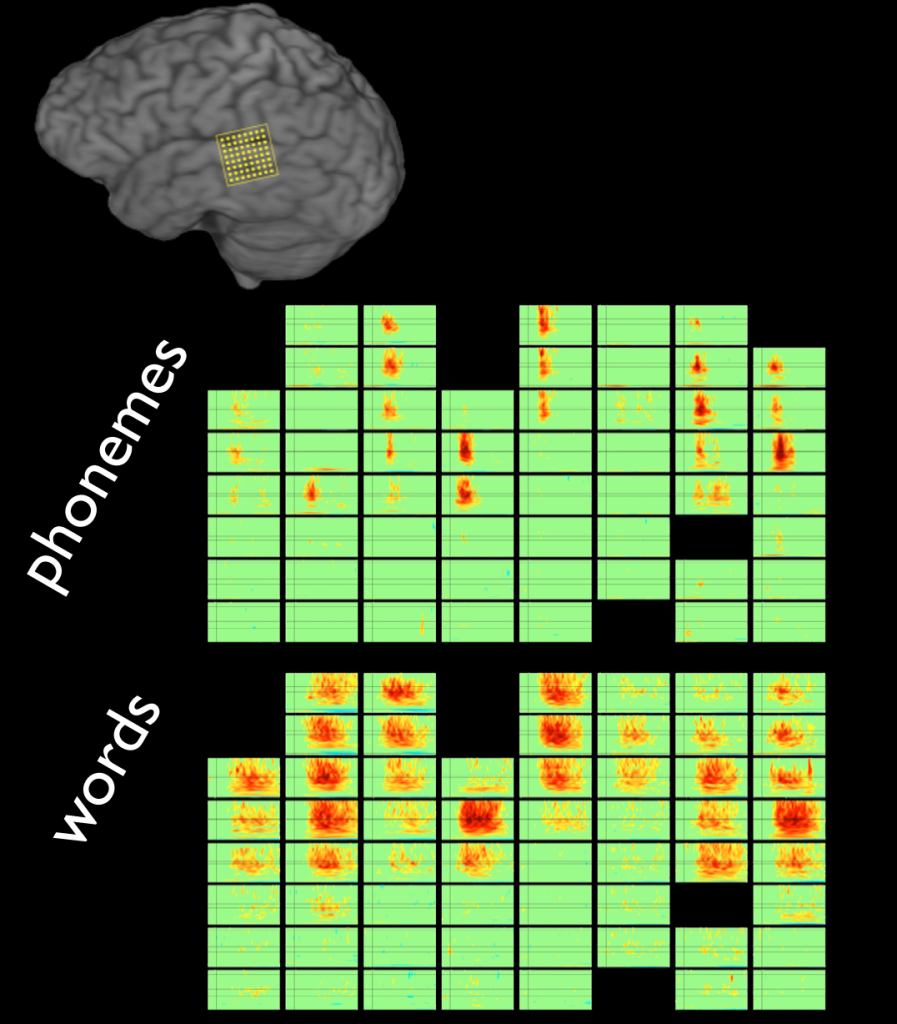

Distributed feedforward and feedback cortical processing supports human speech production

Ran Wang, Xupeng Chen, Amirhossein Kahlilian-Gourtani, Leyao Yu, Patricia Dugan, Daniel Friedman, Werner Doyle, Orrin Devinsky, Yao Wang and Adeen Flinker

Speech production is a complex human function requiring continuous feedforward commands together with reafferent feedback processing. These processes are carried out by distinct frontal and temporal cortical networks, but the degree and timing of their recruitment and dynamics remain poorly understood. We present a deep learning architecture that translates neural signals recorded directly from the cortex to an interpretable representational space that can reconstruct speech. We leverage learned decoding networks to disentangle feedforward vs. feedback processing. Unlike prevailing models, we find a mixed cortical architecture in which frontal and temporal networks each process both feedforward and feedback information in tandem. We elucidate the timing of feedforward and feedback–related processing by quantifying the derived receptive fields. Our approach provides evidence for a surprisingly mixed cortical architecture of speech circuitry together with decoding advances that have important implications for neural prosthetics

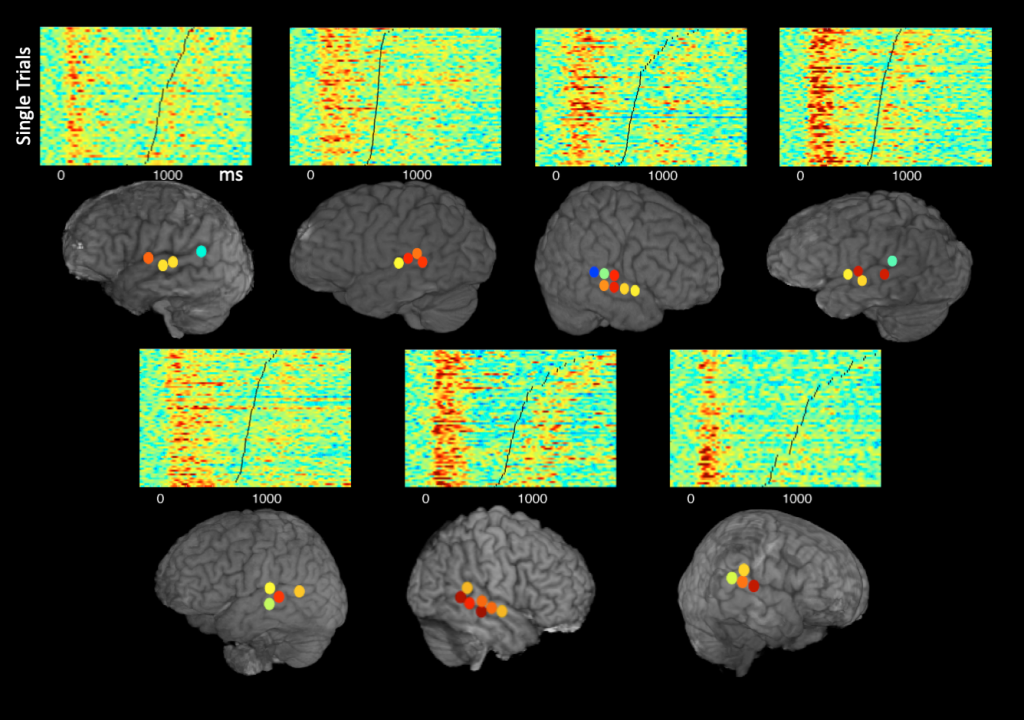

A cortical network processes auditory error signals during human speech production to maintain fluency

Müge Özker, Werner K Doyle, Orrin Devinsky, Adeen Flinker

Hearing one’s own voice is critical for fluent speech production as it allows for the detection and correction of vocalization errors in real time. This behavior known as the auditory feedback control of speech is impaired in various neurological disorders ranging from stuttering to aphasia; however, the underlying neural mechanisms are still poorly understood. Computational models of speech motor control suggest that, during speech production, the brain uses an efference copy of the motor command to generate an internal estimate of the speech output. When actual feedback differs from this internal estimate, an error signal is generated to correct the internal estimate and update necessary motor commands to produce intended speech. We were able to localize the auditory error signal using electrocorticographic recordings from neurosurgical participants during a delayed auditory feedback (DAF) paradigm. In this task, participants hear their voice with a time delay as they produced words and sentences (similar to an echo on a conference call), which is well known to disrupt fluency by causing slow and stutter-like speech in humans. We observed a significant response enhancement in auditory cortex that scaled with the duration of feedback delay, indicating an auditory speech error signal. Immediately following auditory cortex, dorsal precentral gyrus (dPreCG), a region that has not been implicated in auditory feedback processing before, exhibited a markedly similar response enhancement, suggesting a tight coupling between the 2 regions. Critically, response enhancement in dPreCG occurred only during articulation of long utterances due to a continuous mismatch between produced speech and reafferent feedback. These results suggest that dPreCG plays an essential role in processing auditory error signals during speech production to maintain fluency.

Neural correlates of sign language production revealed by electrocorticography

Jennifer Shum, Lora Fanda, Patricia Dugan, Werner K Doyle, Orrin Devinsky, Adeen Flinker

Objective: The combined spatiotemporal dynamics underlying sign language production remains largely unknown. To investigate these dynamics as compared to speech production we utilized intracranial electrocorticography during a battery of language tasks.

Methods: We report a unique case of direct cortical surface recordings obtained from a neurosurgical patient with intact hearing and bilingual in English and American Sign Language. We designed a battery of cognitive tasks to capture multiple modalities of language processing and production.

Results: We identified two spatially distinct cortical networks: ventral for speech and dorsal for sign production. Sign production recruited peri-rolandic, parietal and posterior temporal regions, while speech production recruited frontal, peri-sylvian and peri-rolandic regions. Electrical cortical stimulation confirmed this spatial segregation, identifying mouth areas for speech production and limb areas for sign production. The temporal dynamics revealed superior parietal cortex activity immediately before sign production, suggesting its role in planning and producing sign language.

Conclusions: Our findings reveal a distinct network for sign language and detail the temporal propagation supporting sign production.

Spectrotemporal modulation provides a unifying framework for auditory cortical asymmetries

Redefining the role of Broca’s area in speech

Single-trial speech suppression of auditory cortex activity in humans